Accessibility in XR


♿ Accessibility in XR — Designing for Everyone

Accessibility in XR means building virtual, augmented, and mixed-reality experiences that people of all abilities can use comfortably and effectively. Good accessible XR reduces physical, sensory, and cognitive barriers so more people can benefit from immersive training, entertainment, education, and collaboration.

🌠 Why Accessibility Matters in Immersive Experiences

XR is more than a fancy display; it changes how we perceive space and interact with content. Without intentional design, XR can exclude people with low vision, hearing differences, mobility limits, vestibular sensitivity, or cognitive differences. Accessibility in XR increases your audience, meets ethical design standards, and often improves usability for everyone.

🧠 Core Principles of Accessible XR

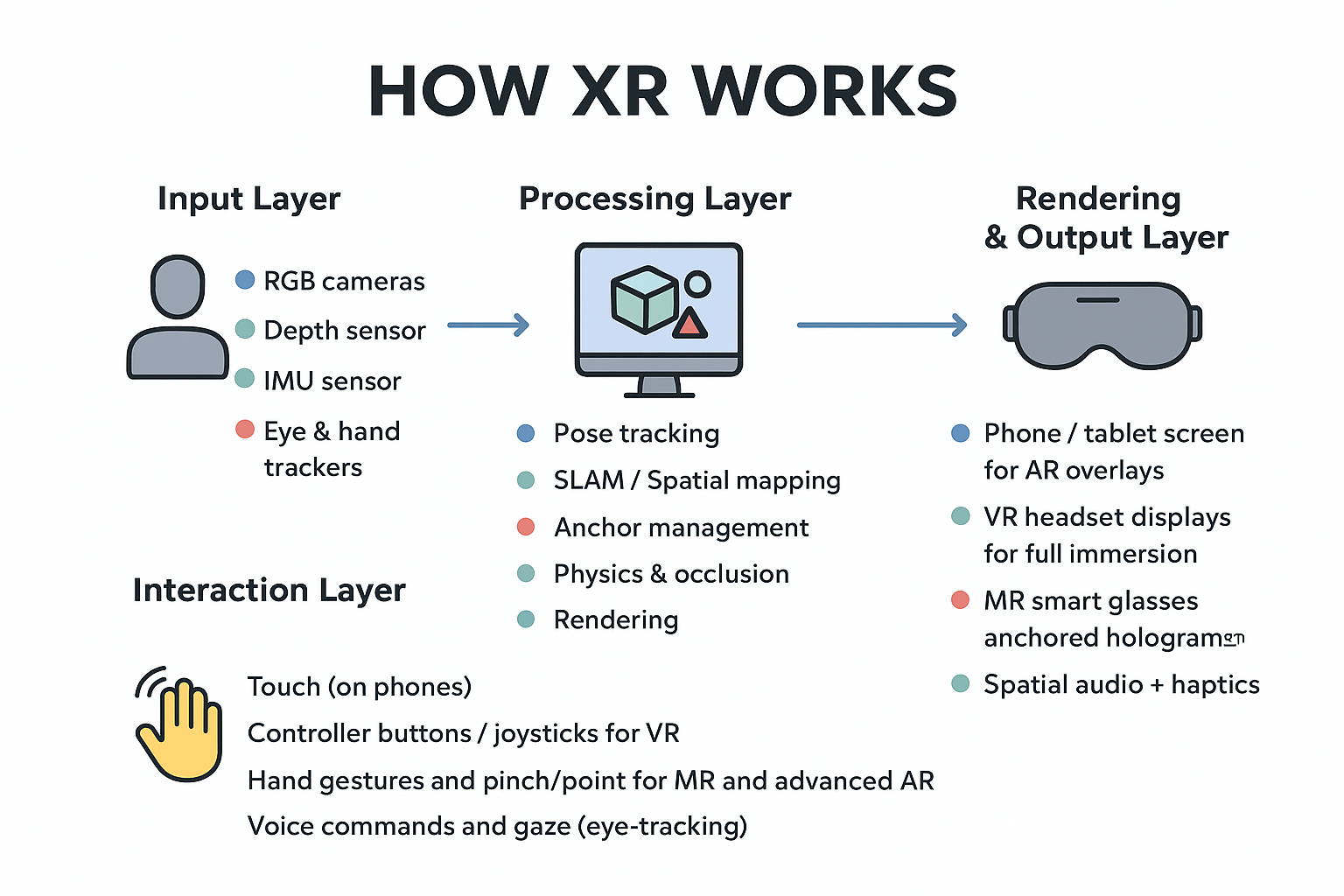

When planning accessibility in XR, follow a few human-centered principles:

- Multiple input methods: provide voice, gaze, gesture, controller, and keyboard fallbacks.

- Control over motion: let users choose locomotion types and turn off or reduce forced motion.

- Perceptual alternatives: captions for audio, audio descriptions for visuals, and high-contrast visuals.

- Adjustability: scalable UI, adjustable text size, color and contrast themes, and dwell-time settings.

- Predictable interactions: simple, reversible actions and clear feedback to avoid confusion.

🔧 Practical Features to Add for Accessibility

Below are practical features you should implement or expose in your settings menu:

- Captioning and transcripts for all spoken content

- Audio descriptions (narration of important visual events)

- High-contrast and large-text display modes

- Adjustable locomotion (teleport, smooth locomotion, snap turns)

- Reduced motion & simplified animations

- Customizable input mapping & sensitivity

- Toggleable assistive overlays (guides, bounding boxes, focus highlights)
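One way to back a settings menu like this is a plain settings object with safe defaults, merged with whatever the user saved earlier. The sketch below is illustrative only: the field names (`captions`, `locomotion`, `textScale`, and so on), the numeric ranges, and the `mergeSettings` helper are assumptions made for this example, not part of any XR SDK.

```javascript
// Hypothetical accessibility settings model; all field names are illustrative.
const DEFAULT_SETTINGS = Object.freeze({
  captions: true,
  audioDescriptions: false,
  highContrast: false,
  textScale: 1.0,          // multiplier applied to UI text size
  locomotion: "teleport",  // "teleport" or "smooth"
  snapTurnDegrees: 30,
  reducedMotion: false,
  assistiveOverlays: false,
});

const LOCOMOTION_MODES = ["teleport", "smooth"];

// Clamp a number into [min, max]; use the fallback if it is not a finite number.
function clampNumber(value, min, max, fallback) {
  if (typeof value !== "number" || !Number.isFinite(value)) return fallback;
  return Math.min(max, Math.max(min, value));
}

// Merge saved preferences over the defaults, ignoring unknown keys,
// mismatched types, and out-of-range values.
function mergeSettings(saved = {}) {
  const merged = { ...DEFAULT_SETTINGS };
  for (const key of Object.keys(DEFAULT_SETTINGS)) {
    if (key in saved && typeof saved[key] === typeof DEFAULT_SETTINGS[key]) {
      merged[key] = saved[key];
    }
  }
  merged.textScale = clampNumber(merged.textScale, 0.75, 3.0, DEFAULT_SETTINGS.textScale);
  merged.snapTurnDegrees = clampNumber(merged.snapTurnDegrees, 15, 90, DEFAULT_SETTINGS.snapTurnDegrees);
  if (!LOCOMOTION_MODES.includes(merged.locomotion)) {
    merged.locomotion = DEFAULT_SETTINGS.locomotion;
  }
  return merged;
}

const settings = mergeSettings({ highContrast: true, textScale: 10, locomotion: "fly" });
console.log(settings.highContrast, settings.textScale, settings.locomotion); // true 3 teleport
```

Validating on load means a corrupted or outdated save file falls back to comfortable defaults instead of leaving a user with unreadable text or an unexpected locomotion mode.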

🧪 Example: Unity — Toggle High-Contrast Mode (C#)

A simple Unity script pattern to switch an accessibility theme (high-contrast) at runtime. Attach to a manager object and wire it to a settings toggle.

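```csharp
// AccessibilityThemeManager.cs
using UnityEngine;

public class AccessibilityThemeManager : MonoBehaviour
{
    // Assign these in the Inspector.
    public Material normalUIMaterial;
    public Material highContrastUIMaterial;
    public Renderer[] uiRenderers;

    // Wire this to a settings toggle. The parameter is named highContrast
    // so it does not hide the inherited Behaviour.enabled property.
    public void SetHighContrast(bool highContrast)
    {
        var mat = highContrast ? highContrastUIMaterial : normalUIMaterial;
        if (mat == null || uiRenderers == null) return;

        foreach (var r in uiRenderers)
        {
            // Skip empty slots left in the Inspector array.
            if (r != null) r.material = mat;
        }

        Debug.Log("High-contrast mode: " + highContrast);
    }
}
```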

💬 Example: WebXR — Captions Overlay (JavaScript)

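A minimal pattern for displaying live captions or transcripts, useful for narrated scenes or multiplayer voice chat. The snippet updates a DOM element; inside an immersive session, the same text would need to be drawn on a floating world-space panel, or shown through the WebXR DOM Overlay feature where the browser supports it.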

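```javascript
// Simple captions updater for a DOM element with id "captionsPanel"
function updateCaptions(text) {
  const panel = document.getElementById("captionsPanel");
  if (!panel) return; // the panel is not in the page

  // Set the caption as plain text (never parsed as HTML).
  panel.textContent = text || "";
  // Hide the panel when there is nothing to show.
  panel.style.display = text ? "block" : "none";
}

// Example usage: updateCaptions("Doctor: Proceed to the next step.");
```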
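Captions from voice chat usually need a speaker label, and long utterances tend to read better when broken into short lines before they reach the panel. The helper below is a rough sketch of that step; `formatCaption` and the 32-character default are assumptions made for this example, not values taken from a captioning standard.

```javascript
// Hypothetical helper: prefix the speaker name and greedily wrap the caption
// into lines of at most maxCharsPerLine characters (a single longer word
// still gets its own line).
function formatCaption(speaker, text, maxCharsPerLine = 32) {
  const labeled = `${speaker ? speaker + ": " : ""}${text}`.trim();
  const words = labeled.split(/\s+/);
  const lines = [];
  let line = "";

  for (const word of words) {
    const candidate = line ? `${line} ${word}` : word;
    if (candidate.length > maxCharsPerLine && line) {
      lines.push(line); // current line is full; start a new one
      line = word;
    } else {
      line = candidate;
    }
  }
  if (line) lines.push(line);
  return lines;
}

console.log(formatCaption("Doctor", "Proceed to the next step and confirm the dosage.", 24));
// [ 'Doctor: Proceed to the', 'next step and confirm', 'the dosage.' ]
```

The resulting lines can be joined with newline characters and passed to whatever renders the caption panel.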

🖐️ Input Flexibility: Multimodal Controls

Offer alternative input methods and let users choose their preferred combination:

- Voice commands for hands-free tasks

- Gaze + dwell or gaze + confirm for selection

- Controller and keyboard fallbacks for precision

- Assistive switch input for users with limited movement
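Gaze + dwell selection from the list above can be sketched as a small timer that restarts whenever the gaze target changes. This is a minimal illustration, assuming the application calls `update()` once per frame with the id of the object under the user's gaze; `createDwellSelector` and its parameters are hypothetical names, and `dwellMs` is expected to be a positive, user-adjustable value.

```javascript
// Hypothetical dwell-selection helper. Call update() every frame with the id
// of the target under the user's gaze (or null) and the current time in ms.
function createDwellSelector(dwellMs, onSelect) {
  let currentTarget = null;
  let gazeStart = 0;
  let fired = false;

  return {
    update(targetId, nowMs) {
      if (targetId !== currentTarget) {
        // Gaze moved to a new target (or to nothing): restart the timer.
        currentTarget = targetId;
        gazeStart = nowMs;
        fired = false;
        return 0;
      }
      if (currentTarget === null) return 0;

      const progress = Math.min(1, (nowMs - gazeStart) / dwellMs);
      if (progress >= 1 && !fired) {
        fired = true; // select only once per continuous gaze
        onSelect(currentTarget);
      }
      return progress; // 0..1, e.g. for drawing a fill ring around the cursor
    },
  };
}

const selected = [];
const dwell = createDwellSelector(800, (id) => selected.push(id));
dwell.update("startButton", 0);
dwell.update("startButton", 400);  // halfway there
dwell.update("startButton", 800);  // dwell time reached: selection fires
dwell.update("startButton", 1200); // still looking: no second selection
console.log(selected); // [ 'startButton' ]
```

Returning the progress value lets the UI show how close a selection is, which gives users a chance to look away before an unintended activation.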

🚶 Motion & Comfort Controls

Motion sickness is a real accessibility barrier. Provide explicit controls:

- Allow teleportation as an alternative to smooth locomotion

- Offer adjustable turn increments (snap turning)

- Offer a comfort vignette (a narrowed field of view) during movement, plus an option to reduce motion blur

- Include a “recenter” or “standing/seated” mode selection
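Two of the controls above reduce to small pieces of arithmetic: snap turning adds a fixed increment to the user's heading, and a comfort vignette can scale with movement speed and a user-chosen intensity. The functions below are a sketch under those assumptions; the names and the linear speed mapping are illustrative choices, not taken from a particular engine.

```javascript
// Normalize an angle in degrees into the range [0, 360).
function normalizeDegrees(angle) {
  return ((angle % 360) + 360) % 360;
}

// Apply one snap turn. `direction` is negative for left, positive for right;
// `incrementDegrees` is the user-chosen step (for example 15, 30, or 45).
function snapTurn(currentYawDegrees, direction, incrementDegrees) {
  return normalizeDegrees(currentYawDegrees + Math.sign(direction) * incrementDegrees);
}

// Vignette strength in 0..1: grows linearly with speed and is scaled by the
// user's intensity preference (0 turns the effect off entirely).
function vignetteStrength(speed, maxSpeed, userIntensity) {
  if (maxSpeed <= 0) return 0;
  const speedRatio = Math.min(1, Math.max(0, speed / maxSpeed));
  const intensity = Math.min(1, Math.max(0, userIntensity));
  return speedRatio * intensity;
}

console.log(snapTurn(350, 1, 30));             // 20
console.log(snapTurn(10, -1, 45));             // 325
console.log(vignetteStrength(1.5, 3, 0.8));    // 0.4
```

Keeping the increment and intensity as parameters makes it straightforward to expose both in the settings menu rather than hard-coding a single comfort profile.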

🔊 Audio & Visual Alternatives

Not every user can see or hear equally well. Implement alternatives and support assistive tech:

- Closed captions for all dialogue and important cues

- Audio descriptions for critical visual-only actions or cutscenes

- Volume normalization and individual channel controls (voice, SFX, ambience)

- Visual indicators for sound events (icons, pulses, subtitles)
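A visual indicator for a sound event needs to know where the sound is relative to the direction the user is facing. The sketch below computes that on the horizontal plane. It assumes a coordinate convention chosen for this example (yaw 0 faces +z, positive yaw turns toward +x); many WebXR and three.js scenes treat -z as forward, so the signs would need adapting. The 45 and 135 degree thresholds are also illustrative.

```javascript
// Bearing of a sound source relative to the listener's heading, in degrees
// within (-180, 180]. Positive values are to the listener's right.
// Assumed convention: yaw 0 faces +z, positive yaw rotates toward +x.
function relativeBearingDegrees(listener, source) {
  const dx = source.x - listener.x;
  const dz = source.z - listener.z;
  const bearing = (Math.atan2(dx, dz) * 180) / Math.PI;
  let relative = (bearing - listener.yawDegrees) % 360;
  if (relative > 180) relative -= 360;
  if (relative <= -180) relative += 360;
  return relative;
}

// Map a relative bearing to the screen edge where an icon or pulse appears.
function indicatorSide(relativeDegrees) {
  const magnitude = Math.abs(relativeDegrees);
  if (magnitude <= 45) return "front";
  if (magnitude >= 135) return "behind";
  return relativeDegrees > 0 ? "right" : "left";
}

const listener = { x: 0, z: 0, yawDegrees: 0 };
console.log(indicatorSide(relativeBearingDegrees(listener, { x: 5, z: 0 })));  // right
console.log(indicatorSide(relativeBearingDegrees(listener, { x: -2, z: 0 }))); // left
console.log(indicatorSide(relativeBearingDegrees(listener, { x: 0, z: -3 }))); // behind
```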

🧠 Testing Accessibility: Who & How

Real-world accessibility testing is essential. Steps to follow:

- Recruit diverse testers (mobility, vision, hearing, neurodiversity).

- Run scenario-based tasks and record success rates and friction points.

- Observe physiological responses (discomfort, nausea) during motion sequences.

- Iterate based on qualitative feedback and measurable metrics.
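The measurable side of these steps can be as simple as aggregating per-task results from test sessions. The sketch below assumes a hypothetical record shape (`task`, `completed`, `seconds`, `discomfort`) invented for this example; the sample records are made up purely to show the calculation, not real test data.

```javascript
// Aggregate hypothetical test-session records into per-task metrics.
// Each record: { task: string, completed: boolean, seconds: number, discomfort: boolean }
function summarizeSessions(sessions) {
  const byTask = new Map();
  for (const s of sessions) {
    const entry = byTask.get(s.task) ??
      { attempts: 0, successes: 0, totalSeconds: 0, discomfortReports: 0 };
    entry.attempts += 1;
    if (s.completed) entry.successes += 1;
    entry.totalSeconds += s.seconds;
    if (s.discomfort) entry.discomfortReports += 1;
    byTask.set(s.task, entry);
  }
  return [...byTask].map(([task, e]) => ({
    task,
    successRate: e.successes / e.attempts,
    meanSeconds: e.totalSeconds / e.attempts,
    discomfortRate: e.discomfortReports / e.attempts,
  }));
}

// Made-up sample records, for illustration only.
const report = summarizeSessions([
  { task: "teleport-to-desk", completed: true, seconds: 20, discomfort: false },
  { task: "teleport-to-desk", completed: true, seconds: 30, discomfort: false },
  { task: "smooth-walk", completed: false, seconds: 60, discomfort: true },
  { task: "smooth-walk", completed: true, seconds: 40, discomfort: false },
]);
console.log(report);
```

Tracking discomfort alongside success rate helps separate tasks that are hard to complete from tasks that are completable but unpleasant, which call for different fixes.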

📠 Design Patterns & Small Interaction Rules

A few small rules that pay big dividends:

- Make interactive targets larger than the minimum touch size, measured in world-space units so they stay usable at a distance.

- Require a confirmation for destructive actions, but keep it to a single step.

- Provide undo and clear exit cues at every stage.

- Keep menus predictable—avoid sudden repositioning of essential controls.
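For the target-size rule, one common way to reason in world-space units is visual angle: a target that subtends an angle θ at distance d has width 2·d·tan(θ/2). The sketch below applies that formula. The 2-degree minimum in the example is a placeholder assumption for illustration, not a published guideline, so substitute a value validated with your own testers.

```javascript
// Width (in the same units as distance) that subtends the given visual angle.
function sizeForVisualAngle(distance, angleDegrees) {
  const halfAngleRadians = (angleDegrees * Math.PI) / 360;
  return 2 * distance * Math.tan(halfAngleRadians);
}

// True if a target of the given width is at least minAngleDegrees wide
// when viewed from the given distance.
function meetsMinimumSize(width, distance, minAngleDegrees) {
  return width >= sizeForVisualAngle(distance, minAngleDegrees);
}

// Example: a button 2 m away with an assumed 2-degree minimum.
const minWidth = sizeForVisualAngle(2, 2);
console.log(minWidth.toFixed(3));            // "0.070" (about 7 cm)
console.log(meetsMinimumSize(0.05, 2, 2));   // false
console.log(meetsMinimumSize(0.10, 2, 2));   // true
```

Because the required width grows with distance, a panel that is comfortable at arm's length may need larger buttons when it is placed across a virtual room.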

🔮 Accessibility Trends in XR

Emerging accessibility trends include AI-driven personalization (such as auto-adjusting comfort settings), eye-tracking-based UI adjustments, and cloud-powered captioning and transcription services that support multiple languages and real-time moderation. These advances should make accessible XR easier to implement and more robust across contexts.

✨ Final Thoughts

Accessibility in XR is not an optional add-on; it's foundational. By designing with empathy, offering flexible controls, and testing with diverse users, you create XR experiences that welcome everyone. Start with a simple accessibility settings panel, iterate with real users, and prioritize comfort and clarity: your audience and your product will be far better for it.
