Voice UI in XR


🎤 Introduction to Voice UI in XR

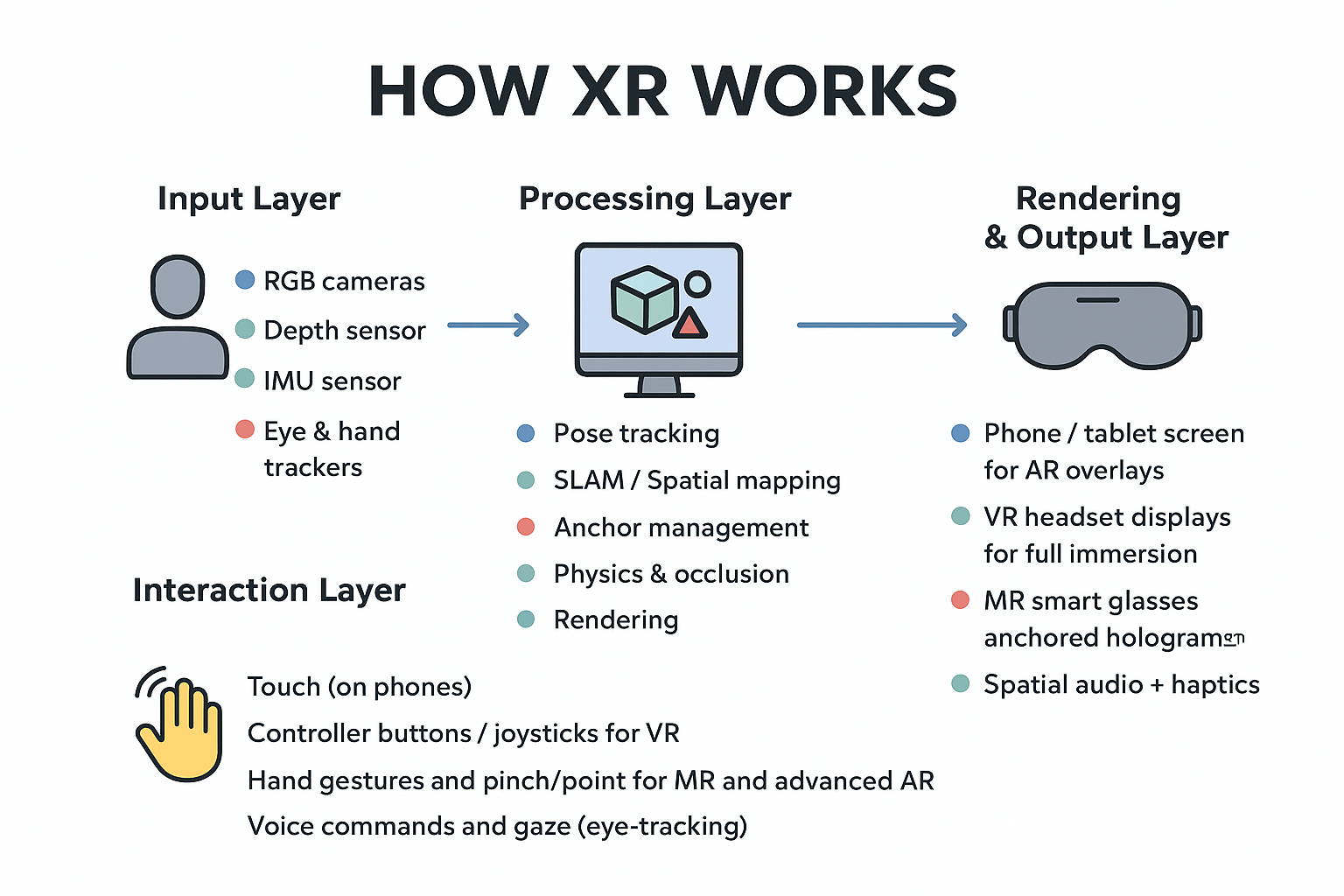

Voice UI in XR is quickly becoming one of the most intuitive and accessible interaction methods across immersive platforms. When users navigate AR, VR, or MR environments, voice commands reduce reliance on controllers, lessen physical strain, and allow a more natural interaction flow. Modern XR systems combine speech recognition, natural language processing (NLP), and spatial context to infer what the user intends.

🧠 Why Voice UI Matters in Immersive Experiences

Voice interaction changes the usability landscape within XR by making tasks quicker and more conversational. Instead of pointing, clicking, or navigating complex 3D menus, users can simply say what they want. This boosts accessibility, supports multitasking, and enhances immersion for training, productivity, gaming, and enterprise XR workflows.

- Hands-free interaction – Ideal for VR training or industrial AR tasks.

- Natural communication – Users can speak instead of navigating interface layers.

- Multimodal fusion – Combine voice with gestures and gaze for richer control.

🎯 Core Principles of Voice UI in XR

Designing Voice UI for immersive environments requires clarity, responsiveness, and a deep understanding of user intent. Unlike traditional mobile or desktop voice UX, XR interfaces must adapt to spatial context and 3D interactions.

- Context awareness: Voice commands should react based on what the user is currently seeing or doing.

- Short + simple commands: XR users don’t always have time for long sentences.

- Error recovery: The system should offer easy fallback options.

- Feedback cues: Audio, haptic, or visual feedback improves user confidence.
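The principles above can be sketched in a few lines of plain C#. This is a minimal illustration, not a prescribed implementation: the names VoiceContext and ContextualCommandRouter are hypothetical, and the contexts and phrases are placeholders. The router keeps one phrase table per context (context awareness), and when a phrase is not valid in the current context it returns a message listing what the user can say instead (error recovery and feedback).

```csharp
using System;
using System.Collections.Generic;

// Illustrative sketch only: VoiceContext and ContextualCommandRouter are
// hypothetical names, not part of Unity or any speech SDK.
public enum VoiceContext { MainMenu, ObjectSelected }

public class ContextualCommandRouter
{
    // One phrase table per context, so a phrase is only valid where it makes sense.
    private readonly Dictionary<VoiceContext, Dictionary<string, Func<string>>> tables =
        new Dictionary<VoiceContext, Dictionary<string, Func<string>>>();

    // The application updates this as the user's situation changes.
    public VoiceContext Current { get; set; } = VoiceContext.MainMenu;

    public void Register(VoiceContext context, string phrase, Func<string> action)
    {
        if (!tables.TryGetValue(context, out var table))
        {
            // Case-insensitive keys, since recognizers differ in how they case results.
            table = new Dictionary<string, Func<string>>(StringComparer.OrdinalIgnoreCase);
            tables[context] = table;
        }
        table[phrase] = action;
    }

    // Returns the feedback text to show or speak to the user.
    public string Handle(string phrase)
    {
        string spoken = phrase.Trim();
        tables.TryGetValue(Current, out var table);

        if (table != null && table.TryGetValue(spoken, out var action))
        {
            return action();
        }

        // Error recovery: explain the failure and list the valid phrases.
        string options = table != null ? string.Join(", ", table.Keys) : "nothing yet";
        return "Sorry, \"" + spoken + "\" is not available here. You can say: " + options;
    }
}
```

In a Unity project, the string returned by Handle could be routed to an on-screen label, a text-to-speech call, or paired with a haptic pulse, depending on which feedback cue suits the scene.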

💡 Voice Command Design Patterns

Just like UI layouts, voice interactions follow predictable patterns that guide user understanding and improve overall usability. Here are three of the most effective ones.

- Direct Commands: “Open menu”, “Select object”, “Start recording”.

- Contextual Commands: “Resize that cube”, “Highlight this panel”.

- Conversational Commands: “What can I do next?”, “Show recommended steps”.
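To make the three patterns concrete, here is a rough heuristic classifier in plain C#. It is only a sketch under simple assumptions: the word lists are illustrative, CommandPatternClassifier is a hypothetical name, and a real system would more likely rely on an NLU service than on keyword rules. Questions and a small list of known open-ended phrases count as conversational, utterances containing pointing words such as "this" or "that" count as contextual, and everything else is treated as a direct command.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum CommandPattern { Direct, Contextual, Conversational }

// Hypothetical helper: a keyword heuristic, not a substitute for real NLU.
public static class CommandPatternClassifier
{
    // Pointing words that only make sense relative to what the user sees.
    private static readonly HashSet<string> DeicticWords =
        new HashSet<string> { "this", "that", "these", "those", "it" };

    private static readonly HashSet<string> QuestionStarters =
        new HashSet<string> { "what", "how", "which", "why", "where" };

    // Open-ended requests that are not phrased as questions must be listed explicitly.
    private static readonly HashSet<string> ConversationalPhrases =
        new HashSet<string> { "show recommended steps" };

    public static CommandPattern Classify(string utterance)
    {
        string normalized = utterance.Trim().ToLowerInvariant();
        bool isQuestion = normalized.EndsWith("?", StringComparison.Ordinal);
        string[] words = normalized.Split(
            new[] { ' ', ',', '.', '?', '!' }, StringSplitOptions.RemoveEmptyEntries);

        // Empty input falls through to Direct so the caller's usual
        // "unknown command" handling deals with it.
        if (words.Length == 0) return CommandPattern.Direct;

        if (isQuestion
            || QuestionStarters.Contains(words[0])
            || ConversationalPhrases.Contains(string.Join(" ", words)))
        {
            return CommandPattern.Conversational;
        }

        if (words.Any(w => DeicticWords.Contains(w))) return CommandPattern.Contextual;

        return CommandPattern.Direct;
    }
}
```

The classification matters because each pattern needs different handling: direct commands can run immediately, contextual ones first need a target from gaze or selection state, and conversational ones usually need a spoken or visual answer.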

🛠️ Example Code: Implementing Voice Commands in Unity XR

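Below is a compact example that uses Unity's KeywordRecognizer, from the UnityEngine.Windows.Speech namespace, to trigger XR interactions. Note that this API is available only on Windows platforms, so other targets need a different speech backend.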

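    // VoiceCommandManager.cs
    using System.Collections.Generic;
    using System.Linq;
    using UnityEngine;
    using UnityEngine.Windows.Speech;

    public class VoiceCommandManager : MonoBehaviour
    {
        private KeywordRecognizer recognizer;
        private Dictionary<string, System.Action> voiceCommands;

        void Start()
        {
            // Map each spoken phrase to the action it should trigger.
            voiceCommands = new Dictionary<string, System.Action>
            {
                { "open menu", OpenMenu },
                { "close menu", CloseMenu },
                { "reset scene", ResetScene }
            };

            // KeywordRecognizer expects a string[] of phrases to listen for.
            recognizer = new KeywordRecognizer(voiceCommands.Keys.ToArray());
            recognizer.OnPhraseRecognized += OnVoiceCommand;
            recognizer.Start();
        }

        private void OnVoiceCommand(PhraseRecognizedEventArgs args)
        {
            Debug.Log("Voice command detected: " + args.text);

            // Guard against phrases that have no registered action.
            if (voiceCommands.TryGetValue(args.text, out System.Action action))
            {
                action.Invoke();
            }
        }

        void OnDestroy()
        {
            // Release the recognizer when this component is destroyed.
            if (recognizer == null) return;
            recognizer.OnPhraseRecognized -= OnVoiceCommand;
            if (recognizer.IsRunning) recognizer.Stop();
            recognizer.Dispose();
        }

        void OpenMenu() { Debug.Log("Menu opened!"); }
        void CloseMenu() { Debug.Log("Menu closed!"); }
        void ResetScene() { Debug.Log("Scene reset!"); }
    }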

🌠 Multimodal Interaction: Voice + Gaze + Gesture

Voice UI becomes significantly more powerful when paired with other input streams. Combining voice with gaze or hand gestures allows XR systems to interpret ambiguous commands with better precision.

- Voice + Gaze: “Select this” while looking at an object.

- Voice + Gesture: Say “Rotate” while rotating your hand.

- Voice + Controllers: Use voice to trigger menus, controllers for precision tasks.
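The Voice + Gaze pairing hides a timing problem: a recognizer reports a phrase only after the user has finished speaking, and by then the eyes may have moved on. One possible approach, sketched below in plain C#, is to log every change of gaze target with a timestamp and resolve "this" against the moment the phrase started. GazeVoiceFusion and its methods are hypothetical names, and the object IDs are assumed to come from the application's own gaze raycast. Unity's PhraseRecognizedEventArgs exposes a phraseStartTime that could serve as that timestamp once converted to the same clock.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: resolves a spoken "this" to the object the user was
// looking at when the phrase began, not when recognition finished.
public class GazeVoiceFusion
{
    // (time in seconds, object id) pairs, appended in chronological order.
    private readonly List<KeyValuePair<double, string>> gazeChanges =
        new List<KeyValuePair<double, string>>();

    // Call whenever the gaze target changes.
    // Pass null as objectId when the user looks away from all objects.
    public void RecordGazeChange(double timeSeconds, string objectId)
    {
        gazeChanges.Add(new KeyValuePair<double, string>(timeSeconds, objectId));
    }

    // Returns the id of the object under gaze at phraseStartSeconds,
    // or null if nothing was being looked at.
    public string ResolveTarget(double phraseStartSeconds)
    {
        // Walk backwards to find the latest change at or before the phrase start.
        for (int i = gazeChanges.Count - 1; i >= 0; i--)
        {
            if (gazeChanges[i].Key <= phraseStartSeconds)
            {
                return gazeChanges[i].Value;
            }
        }
        return null;
    }
}
```

A null result is a natural place to apply error recovery, for example by asking the user to look at an object and repeat the command.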

📦 Practical Use Cases of Voice UI in XR

Voice UI is already transforming multiple industries:

- Healthcare: Surgeons use voice commands during AR-assisted procedures.

- Automotive: Hands-free VR design modeling.

- Training & Simulation: Faster step-by-step workflows.

- Gaming: Characters respond dynamically to spoken actions.

✨ Challenges When Designing Voice UI

Building a robust Voice UI system requires handling environmental noise, accents, echo, and device limitations. Developers must also optimize for low latency, especially in VR where delay breaks immersion.

- Background noise disruption

- Microphone placement issues

- Speech recognition accuracy

- Language and dialect variations
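One common way to soften accuracy problems caused by noise or accents is to gate commands on the recognizer's confidence. The sketch below, in plain C#, assumes the recognizer reports a score between 0 and 1. ConfidenceGate is a hypothetical name and the thresholds are illustrative placeholders, not tuned values. Unity's KeywordRecognizer reports a coarse ConfidenceLevel rather than a number, so with that API the same three-way decision would be mapped from its levels instead.

```csharp
public enum RecognitionDecision { Execute, AskToConfirm, Ignore }

// Hypothetical helper: turns a recognition confidence score into an action policy.
public static class ConfidenceGate
{
    // executeAt and confirmAt are placeholder thresholds; tune them per device
    // and environment using real recordings.
    public static RecognitionDecision Decide(
        float confidence, float executeAt = 0.8f, float confirmAt = 0.5f)
    {
        // High confidence: run the command immediately to keep latency low.
        if (confidence >= executeAt) return RecognitionDecision.Execute;

        // Medium confidence: ask "Did you mean ...?" before acting.
        if (confidence >= confirmAt) return RecognitionDecision.AskToConfirm;

        // Low confidence: most likely background noise, so do nothing.
        return RecognitionDecision.Ignore;
    }
}
```

Asking for confirmation costs an extra turn, so it is usually worth reserving for destructive actions such as resetting a scene and letting harmless commands run at a lower threshold.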

🏁 Final Thoughts

Voice UI in XR is not just an add-on. It is becoming a standard for hands-free, intuitive, and inclusive interaction across the immersive tech ecosystem. As voice AI improves, XR experiences should feel smoother, smarter, and more human-centered. The future of XR belongs to natural communication, and voice is leading the way.
